v1-2022.4.24 - Hands-on: Installing ingress-nginx with Helm (tested successfully)

Updated: March 8, 2024



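Environment

- Lab environment

1. Windows 10 with VMware Workstation virtual machines;

2. Kubernetes cluster: 3 CentOS 7.6 (1810) virtual machines, 1 master node and 2 worker nodes

k8s version: v1.22.2

containerd://1.5.5

3. helm: v3.7.2

4. ingress-nginx chart: v4.1.0

- Lab software

Link: https://pan.baidu.com/s/1WbnzTI3II7X3jGyCKDN3GQ?pwd=83ol

Extraction code: 83ol

2022.4.26 - Lab software - "Hands-on: ingress-nginx installation" - 阳总 - 2022.4.26 (shared on the blog)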

Prerequisites

- A Kubernetes cluster already exists

- Helm is installed for that cluster
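
Both prerequisites can be checked before starting. The following is a minimal sketch, assuming `kubectl` and `helm` are on the PATH of the machine you work from; the helper names `require_cmd` and `preflight` are made up for this example.

```shell
# Preflight check (illustrative helpers, not part of the original walkthrough).

# require_cmd NAME: succeed if NAME is available on PATH, otherwise print an error.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    return 0
  fi
  echo "missing required command: $1" >&2
  return 1
}

# preflight: verify the tools exist, then show cluster and Helm versions.
preflight() {
  require_cmd kubectl || return 1
  require_cmd helm || return 1
  kubectl get nodes -o wide   # every node should report Ready
  helm version --short        # this walkthrough used Helm v3.7.2
}

# Run it with:  preflight
```

Nothing is executed until `preflight` is called, so the snippet can be pasted into a shell session safely.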

1. Install ingress-nginx with Helm

Here we install it using the Helm chart:

- For how to install Helm itself, see my document:

Hands-on: Helm package management - 2022.4.4: https://blog.csdn.net/weixin_39246554/article/details/123955289

- Download the ingress-nginx chart package

➜ helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

➜ helm repo update

➜ helm fetch ingress-nginx/ingress-nginx

➜ tar -xvf ingress-nginx-4.1.0.tgz && cd ingress-nginx

➜ tree .

.

├── CHANGELOG.md

├── Chart.yaml

├── OWNERS

├── README.md

├── ci

│ ├── controller-custom-ingressclass-flags.yaml

│ ├── daemonset-customconfig-values.yaml

│ ├── daemonset-customnodeport-values.yaml

│ ├── daemonset-headers-values.yaml

│ ├── daemonset-internal-lb-values.yaml

│ ├── daemonset-nodeport-values.yaml

│ ├── daemonset-podannotations-values.yaml

│ ├── daemonset-tcp-udp-configMapNamespace-values.yaml

│ ├── daemonset-tcp-udp-values.yaml

│ ├── daemonset-tcp-values.yaml

│ ├── deamonset-default-values.yaml

│ ├── deamonset-metrics-values.yaml

│ ├── deamonset-psp-values.yaml

│ ├── deamonset-webhook-and-psp-values.yaml

│ ├── deamonset-webhook-values.yaml

│ ├── deployment-autoscaling-behavior-values.yaml

│ ├── deployment-autoscaling-values.yaml

│ ├── deployment-customconfig-values.yaml

│ ├── deployment-customnodeport-values.yaml

│ ├── deployment-default-values.yaml

│ ├── deployment-headers-values.yaml

│ ├── deployment-internal-lb-values.yaml

│ ├── deployment-metrics-values.yaml

│ ├── deployment-nodeport-values.yaml

│ ├── deployment-podannotations-values.yaml

│ ├── deployment-psp-values.yaml

│ ├── deployment-tcp-udp-configMapNamespace-values.yaml

│ ├── deployment-tcp-udp-values.yaml

│ ├── deployment-tcp-values.yaml

│ ├── deployment-webhook-and-psp-values.yaml

│ ├── deployment-webhook-resources-values.yaml

│ └── deployment-webhook-values.yaml

├── templates

│ ├── NOTES.txt

│ ├── _helpers.tpl

│ ├── _params.tpl

│ ├── admission-webhooks

│ │ ├── job-patch

│ │ │ ├── clusterrole.yaml

│ │ │ ├── clusterrolebinding.yaml

│ │ │ ├── job-createSecret.yaml

│ │ │ ├── job-patchWebhook.yaml

│ │ │ ├── psp.yaml

│ │ │ ├── role.yaml

│ │ │ ├── rolebinding.yaml

│ │ │ └── serviceaccount.yaml

│ │ └── validating-webhook.yaml

│ ├── clusterrole.yaml

│ ├── clusterrolebinding.yaml

│ ├── controller-configmap-addheaders.yaml

│ ├── controller-configmap-proxyheaders.yaml

│ ├── controller-configmap-tcp.yaml

│ ├── controller-configmap-udp.yaml

│ ├── controller-configmap.yaml

│ ├── controller-daemonset.yaml

│ ├── controller-deployment.yaml

│ ├── controller-hpa.yaml

│ ├── controller-ingressclass.yaml

│ ├── controller-keda.yaml

│ ├── controller-poddisruptionbudget.yaml

│ ├── controller-prometheusrules.yaml

│ ├── controller-psp.yaml

│ ├── controller-role.yaml

│ ├── controller-rolebinding.yaml

│ ├── controller-service-internal.yaml

│ ├── controller-service-metrics.yaml

│ ├── controller-service-webhook.yaml

│ ├── controller-service.yaml

│ ├── controller-serviceaccount.yaml

│ ├── controller-servicemonitor.yaml

│ ├── default-backend-deployment.yaml

│ ├── default-backend-hpa.yaml

│ ├── default-backend-poddisruptionbudget.yaml

│ ├── default-backend-psp.yaml

│ ├── default-backend-role.yaml

│ ├── default-backend-rolebinding.yaml

│ ├── default-backend-service.yaml

│ ├── default-backend-serviceaccount.yaml

│ └── dh-param-secret.yaml

└── values.yaml

4 directories, 81 files

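After the Helm chart package is downloaded and unpacked, you can see the template files it contains. The ci directory holds values files for installing in various scenarios, and values.yaml holds the default for every configurable option. We can override these defaults.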
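
Without a version, `helm fetch` pulls whichever chart is newest at the time, so the tarball may not be named `ingress-nginx-4.1.0.tgz`. The sketch below pins the chart version used in this walkthrough and saves the chart defaults for comparison. It assumes the `ingress-nginx` repo alias added above; the function name `fetch_chart` and the file name `default-values.yaml` are illustrative.

```shell
CHART_VERSION="4.1.0"   # chart version used in this walkthrough

fetch_chart() {
  # `helm pull` is the current name of `helm fetch`; --untar unpacks into ./ingress-nginx
  helm pull ingress-nginx/ingress-nginx --version "$CHART_VERSION" --untar || return 1
  # Save the chart defaults so overrides can be compared against them
  helm show values ingress-nginx/ingress-nginx --version "$CHART_VERSION" > default-values.yaml
}

# Run it with:  fetch_chart
```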

⚠️ Note:

If you prefer not to install with the Helm chart, you can also install in one step with the following command

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.1.0/deploy/static/provider/cloud/deploy.yaml

2. Create a custom values file

- Next, create a values file named ci/daemonset-prod.yaml to override the default ingress-nginx values.

Note: the parameters below are not arbitrary. Only keys that exist in values.yaml can be set.

# vim ci/daemonset-prod.yaml
# ci/daemonset-prod.yaml
controller:
  name: controller
  image:
    repository: cnych/ingress-nginx  # image mirrored by the instructor
    tag: "v1.1.0"
    digest:
  dnsPolicy: ClusterFirstWithHostNet
  hostNetwork: true
  publishService:  # set to false in hostNetwork mode so the Ingress status is reported with the node IP
    enabled: false
  # Whether to handle Ingress objects that have neither an ingressClass annotation nor an ingressClassName field
  # Setting this to true adds the --watch-ingress-without-class flag to the controller's startup arguments
  watchIngressWithoutClass: false
  kind: Deployment
  tolerations:  # on kubeadm clusters the master is tainted by default; tolerate the taint so the Pod can be scheduled there
  - key: "node-role.kubernetes.io/master"
    operator: "Equal"
    effect: "NoSchedule"
  nodeSelector:  # pin to the master1 node
    kubernetes.io/hostname: master1
  service:  # no Service is needed in hostNetwork mode
    enabled: false
  admissionWebhooks:  # enabling the admission webhook is strongly recommended
    enabled: true
    createSecretJob:
      resources:
        limits:
          cpu: 10m
          memory: 20Mi
        requests:
          cpu: 10m
          memory: 20Mi
    patchWebhookJob:
      resources:
        limits:
          cpu: 10m
          memory: 20Mi
        requests:
          cpu: 10m
          memory: 20Mi
    patch:
      enabled: true
      image:
        repository: cnych/ingress-nginx-webhook-certgen  # image mirrored by the instructor
        tag: v1.1.1
        digest:
defaultBackend:  # configure the default backend
  enabled: true
  name: defaultbackend
  image:
    repository: cnych/ingress-nginx-defaultbackend  # image mirrored by the instructor
    tag: "1.5"
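
Before installing, it can help to render the chart locally and confirm that the overrides have the intended effect. This is a hedged sketch meant to be run from the unpacked chart directory; `render_check` and the output path under `/tmp` are made-up names, and the release name and namespace match the ones used in the next step.

```shell
render_check() {
  values_file="${1:-./ci/daemonset-prod.yaml}"
  rendered="/tmp/ingress-nginx-rendered.yaml"   # illustrative output path

  # Static checks on the chart together with the override file
  helm lint . -f "$values_file" || return 1

  # Render the manifests locally; nothing is sent to the cluster
  helm template ingress-nginx . -f "$values_file" \
    --namespace ingress-nginx > "$rendered" || return 1

  # Show the workload kinds and the hostNetwork setting that were rendered
  grep -n -E '^kind: (DaemonSet|Deployment)$|hostNetwork:' "$rendered"
}

# Run it with:  render_check ./ci/daemonset-prod.yaml
```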

3. Deploy

- Then install ingress-nginx into the ingress-nginx namespace with the following command:

[root@master1 ingress-nginx]#helm upgrade --install ingress-nginx . -f ./ci/daemonset-prod.yaml --create-namespace --namespace ingress-nginx # upgrade --install: upgrade the release if it already exists, otherwise install it

# Wait patiently here for a moment

Release "ingress-nginx" has been upgraded. Happy Helming!

NAME: ingress-nginx

LAST DEPLOYED: Tue Apr 26 21:05:04 2022

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status by running 'kubectl --namespace ingress-nginx get services -o wide -w ingress-nginx-controller'

An example Ingress that makes use of the controller:
  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: example
    namespace: foo
  spec:
    ingressClassName: nginx
    rules:
      - host: www.example.com
        http:
          paths:
            - pathType: Prefix
              backend:
                service:
                  name: exampleService
                  port:
                    number: 80
              path: /
    # This section is only required if TLS is to be enabled for the Ingress
    tls:
      - hosts:
        - www.example.com
        secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

  apiVersion: v1
  kind: Secret
  metadata:
    name: example-tls
    namespace: foo
  data:
    tls.crt: <base64 encoded cert>
    tls.key: <base64 encoded key>
  type: kubernetes.io/tls
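
Without `--wait`, the `helm upgrade --install` command returns once the objects are submitted, not necessarily once the controller Pod is ready. One way to block until it is ready is sketched below; the function name `wait_for_controller` and the 120-second timeout are assumptions, while the label selector is the `app.kubernetes.io/component=controller` label the chart puts on controller resources.

```shell
wait_for_controller() {
  # Block until the controller Pod reports Ready, or give up after the timeout
  kubectl wait --namespace ingress-nginx \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout=120s || return 1

  # Show the release status and revision recorded by Helm
  helm status ingress-nginx --namespace ingress-nginx
}

# Run it with:  wait_for_controller
```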

4. Verify

- After deployment, check the status of the Pods:

[root@master1 ingress-nginx]#kubectl get pod -n ingress-nginx -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-controller-r5964 1/1 Running 0 8m2s 172.29.9.51 master1 <none> <none>

ingress-nginx-defaultbackend-84854cd6cb-8gzcm 1/1 Running 0 8m2s 10.244.1.197 node1 <none> <none>

[root@master1 ingress-nginx]#kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller-admission ClusterIP 10.106.208.0 <none> 443/TCP 8m8s

ingress-nginx-defaultbackend ClusterIP 10.106.66.15 <none> 80/TCP 8m8s

- Check the logs of the ingress-nginx pod:

[root@master1 ingress-nginx]# POD_NAME=$(kubectl get pods -l app.kubernetes.io/name=ingress-nginx -n ingress-nginx -o jsonpath='{.items[0].metadata.name}')

[root@master1 ingress-nginx]#echo $POD_NAME

ingress-nginx-controller-r5964

[root@master1 ingress-nginx]#kubectl logs $POD_NAME -n ingress-nginx

-------------------------------------------------------------------------------

NGINX Ingress controller

Release: v1.1.0

Build: cacbee86b6ccc45bde8ffc184521bed3022e7dee

Repository: https://github.com/kubernetes/ingress-nginx

nginx version: nginx/1.19.9

-------------------------------------------------------------------------------

W0426 13:00:16.359192 7 client_config.go:615] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

I0426 13:00:16.359981 7 main.go:223] "Creating API client" host="https://10.96.0.1:443"

I0426 13:00:16.387442 7 main.go:267] "Running in Kubernetes cluster" major="1" minor="22" git="v1.22.2" state="clean" commit="8b5a19147530eaac9476b0ab82980b4088bbc1b2" platform="linux/amd64"

I0426 13:00:16.400163 7 main.go:86] "Valid default backend" service="ingress-nginx/ingress-nginx-defaultbackend"

I0426 13:00:16.615214 7 main.go:104] "SSL fake certificate created" file="/etc/ingress-controller/ssl/default-fake-certificate.pem"

I0426 13:00:16.704300 7 ssl.go:531] "loading tls certificate" path="/usr/local/certificates/cert" key="/usr/local/certificates/key"

I0426 13:00:16.752208 7 nginx.go:255] "Starting NGINX Ingress controller"

I0426 13:00:16.785466 7 event.go:282] Event(v1.ObjectReference{Kind:"ConfigMap", Namespace:"ingress-nginx", Name:"ingress-nginx-controller", UID:"8e41333d-a6e7-47d6-a8e8-b1d0dab0fda7", APIVersion:"v1", ResourceVersion:"2336338", FieldPath:""}): type: 'Normal' reason: 'CREATE' ConfigMap ingress-nginx/ingress-nginx-controller

I0426 13:00:17.963766 7 store.go:424] "Found valid IngressClass" ingress="default/ghost" ingressclass="nginx"

I0426 13:00:17.965404 7 event.go:282] Event(v1.ObjectReference{Kind:"Ingress", Namespace:"default", Name:"ghost", UID:"b421eee9-26f3-43a2-8d07-08df3c9fd814", APIVersion:"networking.k8s.io/v1", ResourceVersion:"2321677", FieldPath:""}): type: 'Normal' reason: 'Sync' Scheduled for sync

I0426 13:00:18.055029 7 nginx.go:297] "Starting NGINX process"

I0426 13:00:18.055380 7 leaderelection.go:248] attempting to acquire leader lease ingress-nginx/ingress-controller-leader...

I0426 13:00:18.061064 7 status.go:84] "New leader elected" identity="ingress-nginx-controller-dm4bg"

I0426 13:00:18.061232 7 nginx.go:317] "Starting validation webhook" address=":8443" certPath="/usr/local/certificates/cert" keyPath="/usr/local/certificates/key"

I0426 13:00:18.062097 7 controller.go:155] "Configuration changes detected, backend reload required"

I0426 13:00:18.177454 7 controller.go:172] "Backend successfully reloaded"

I0426 13:00:18.177565 7 controller.go:183] "Initial sync, sleeping for 1 second"

I0426 13:00:18.177972 7 event.go:282] Event(v1.ObjectReference{Kind:"Pod", Namespace:"ingress-nginx", Name:"ingress-nginx-controller-r5964", UID:"edd71a4c-5f9d-4b3c-aa8e-b45ef67472ef", APIVersion:"v1", ResourceVersion:"2336371", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration

I0426 13:00:57.356030 7 leaderelection.go:258] successfully acquired lease ingress-nginx/ingress-controller-leader

I0426 13:00:57.356251 7 status.go:84] "New leader elected" identity="ingress-nginx-controller-r5964"

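When you see the output above, ingress-nginx has been deployed successfully. As the log shows, the controller running here is release v1.1.0, the image tag set in the values file.

- After installation, an IngressClass object named nginx is created automatically: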

[root@master1 ingress-nginx]#kubectl get ingressclass

NAME CONTROLLER PARAMETERS AGE

nginx k8s.io/ingress-nginx <none> 12m

[root@master1 ingress-nginx]#kubectl get ingressclass nginx -oyaml

apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  annotations:
    meta.helm.sh/release-name: ingress-nginx
    meta.helm.sh/release-namespace: ingress-nginx
  creationTimestamp: "2022-04-26T13:00:15Z"
  generation: 1
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.0
    helm.sh/chart: ingress-nginx-4.1.0
  name: nginx
  resourceVersion: "2336359"
  uid: 52bf2d88-a0d4-48e4-bb25-e07c7ae05375
spec:
  controller: k8s.io/ingress-nginx

Note that only the controller attribute is set here. If additional parameters are needed, they can be configured in the values file used for the installation.
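
As an illustration of configuring the IngressClass through values, the sketch below writes a small extra values file. It assumes the `controller.ingressClassResource` keys found in the 4.x chart's values.yaml, so check them against your unpacked chart first; the file name and location are made up for this example.

```shell
# Write an extra values file for the IngressClass settings (illustrative path).
OUT="${TMPDIR:-/tmp}/ingressclass-values.yaml"

cat > "$OUT" <<'EOF'
controller:
  ingressClassResource:
    name: nginx              # name of the IngressClass object
    enabled: true
    default: false           # true would make this the cluster's default class
    controllerValue: "k8s.io/ingress-nginx"
EOF

echo "wrote $OUT"
```

The file could then be passed as a second `-f` argument after `./ci/daemonset-prod.yaml` in the `helm upgrade --install` command; when several files are given, values from later files take precedence.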

5. A first example

- With the installation done, let's create an Ingress resource for an nginx application, as shown below:

# first-ingress.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: my-nginx

spec:

selector:

matchLabels:

app: my-nginx

template:

metadata:

labels:

app: my-nginx

spec:

containers:

- name: my-nginx

image: nginx

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: my-nginx

labels:

app: my-nginx

spec:

ports:

- port: 80

protocol: TCP

name: http

selector:

app: my-nginx

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: my-nginx

namespace: default

spec:

ingressClassName: nginx # 使用 nginx 的 IngressClass(关联的 ingress-nginx 控制器)

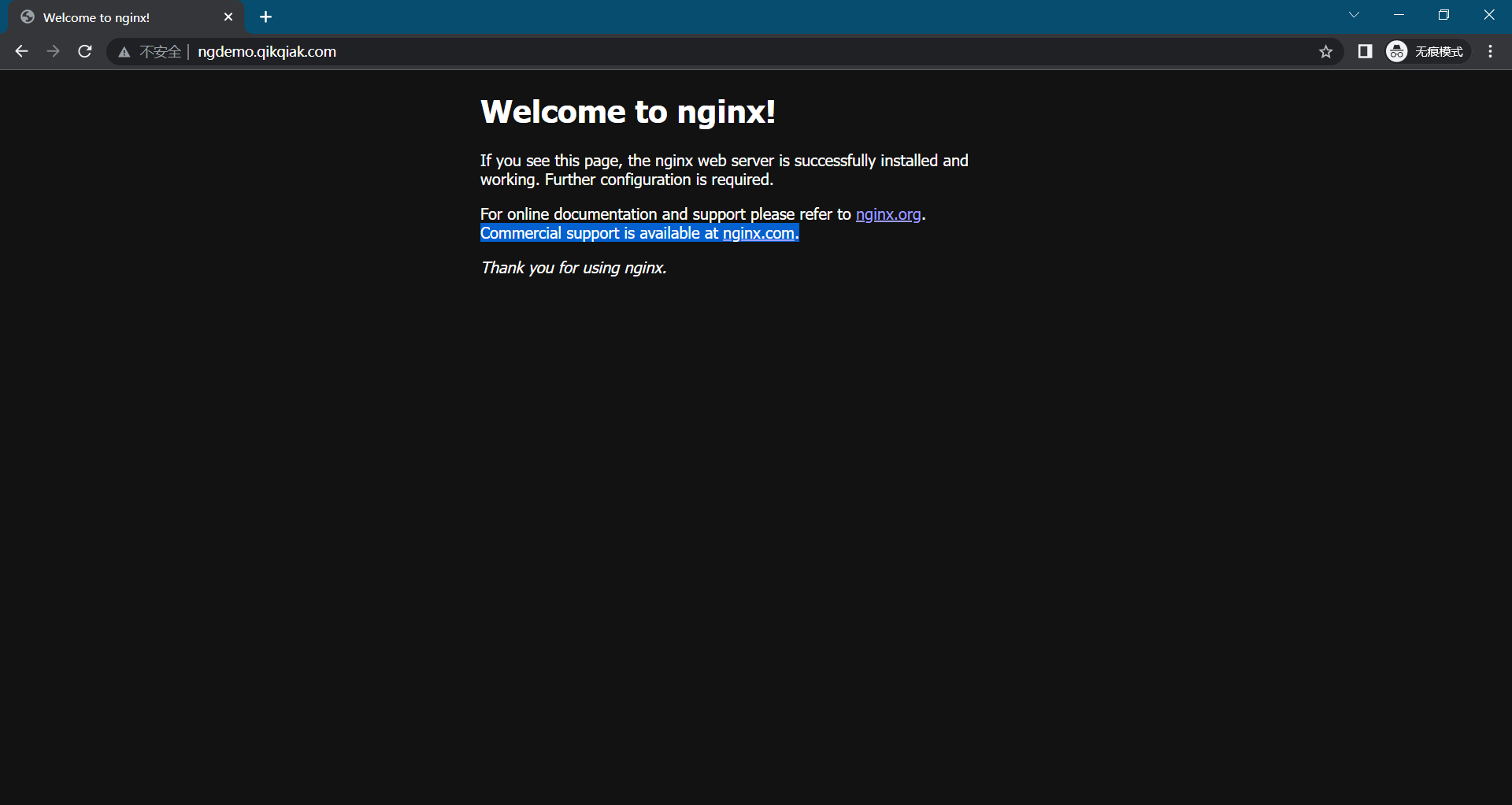

rules:

- host: ngdemo.qikqiak.com # 将域名映射到 my-nginx 服务

http:

paths:

- path: /

pathType: Prefix

backend:

service: # 将所有请求发送到 my-nginx 服务的 80 端口

name: my-nginx

port:

number: 80

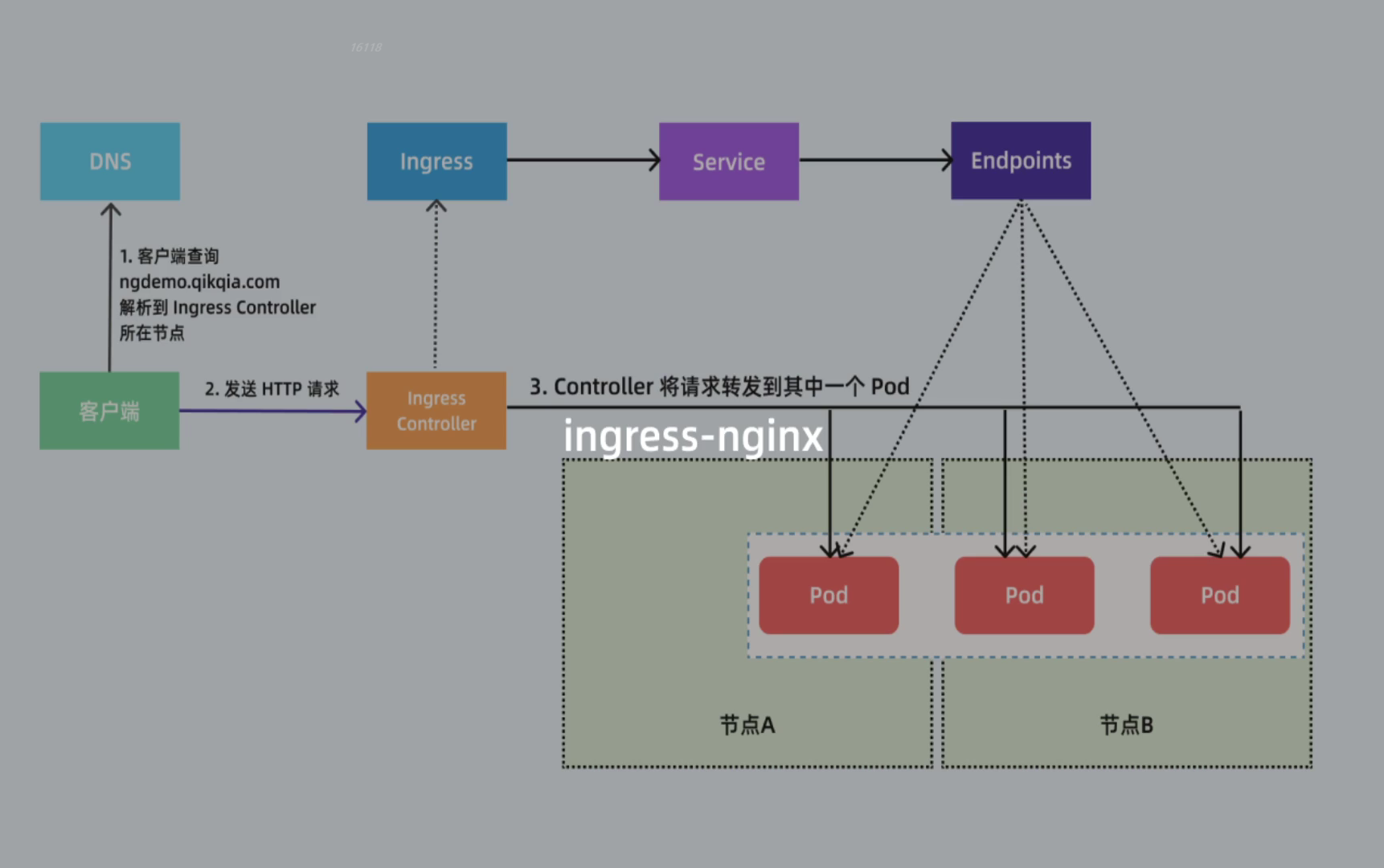

# 不过需要注意大部分Ingress控制器都不是直接转发到Service,而是只是通过Service来获取后端的Endpoints列表(因此这里的svc只起到了一个服务发现的作用),直接转发到Pod,这样可以减少网络跳转,提高性能!!!

- Create the resource objects above directly:

[root@master1 ingress-nginx]#kubectl apply -f first-ingress.yaml

deployment.apps/my-nginx created

service/my-nginx created

ingress.networking.k8s.io/my-nginx created

[root@master1 ingress-nginx]#kubectl get po

NAME READY STATUS RESTARTS AGE

my-nginx-7c4ff94949-hrxbh 1/1 Running 0 70s

[root@master1 ingress-nginx]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 177d

my-nginx ClusterIP 10.101.20.210 <none> 80/TCP 72s
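
Because the controller proxies to the Pod addresses behind the Service rather than to the ClusterIP, it can be useful to look at the endpoints it will pick up. A small sketch follows; `show_backends` is an illustrative name, and the defaults are the Service name and namespace from first-ingress.yaml.

```shell
show_backends() {
  svc="${1:-my-nginx}"
  ns="${2:-default}"

  # Pod IP:port pairs that sit behind the Service
  kubectl get endpoints "$svc" -n "$ns" -o wide || return 1

  # The Ingress as the API server sees it, including its rules and backends
  kubectl describe ingress "$svc" -n "$ns"
}

# Run it with:  show_backends my-nginx default
```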

Remember to add a hosts entry for the domain on your local PC:

C:\WINDOWS\System32\drivers\etc\hosts

172.29.9.51 ngdemo.qikqiak.com

[root@master1 ingress-nginx]#kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

my-nginx nginx ngdemo.qikqiak.com 172.29.9.51 80 2m19s

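In the Ingress resource above, ingressClassName: nginx tells the ingress-nginx controller we installed to handle this Ingress. The path type is Prefix, matching /, so all requests for the domain ngdemo.qikqiak.com are forwarded to the backend Endpoints of the my-nginx service.

Once the resources are created, resolve the domain ngdemo.qikqiak.com to any of the edge nodes where ingress-nginx runs (adding a mapping to the local /etc/hosts also works), and the application can then be reached by domain name.

(For this local test the hosts file was edited directly; in production, DNS is normally used.)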

- Verification
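
The request path can also be checked from a shell without a browser. The sketch below assumes the node IP and host name used in this lab; `hosts_entry` and `check_ingress` are made-up helper names. curl's `--resolve` option pins the name to the node IP for a single request, so no hosts file change is needed for the test itself.

```shell
INGRESS_IP="172.29.9.51"            # node running the controller (master1 in this lab)
INGRESS_HOST="ngdemo.qikqiak.com"

# hosts_entry IP HOST: print a line suitable for /etc/hosts or the Windows hosts file
hosts_entry() {
  printf '%s %s\n' "$1" "$2"
}

# check_ingress: request / through the ingress and print only the HTTP status code
check_ingress() {
  curl -sS -o /dev/null -w '%{http_code}\n' \
    --resolve "${INGRESS_HOST}:80:${INGRESS_IP}" \
    "http://${INGRESS_HOST}/"
}

# Run it with:  check_ingress
```

A 200 status would indicate that the request reached the my-nginx backend through the controller.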

Installation complete. 😘

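About me

The aims of my blog:

- Clean layout and concise language;

- Documents that work as manuals: detailed steps, no hidden pitfalls, source code provided;

- All of my hands-on documents have been tested successfully by me. If you have any questions while following along, feel free to contact me and I will help you solve them. Let's improve together!

🍀 WeChat QR code: x2675263825 (舍得), QQ: 2675263825.

🍀 WeChat official account: 《云原生架构师实战》

🍀 Yuque

https://www.yuque.com/xyy-onlyone

🍀 CSDN https://blog.csdn.net/weixin_39246554?spm=1010.2135.3001.5421

🍀 Zhihu https://www.zhihu.com/people/foryouone

Finally

That's all for this time. Thank you for reading. I wish you a happy life and a meaningful day, every day. See you next time!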
